AWS DeepLens and Soracom Cellular IoT

When the end of November comes around, I always think of Las Vegas. Yes, there’s Thanksgiving too, but for the past six years I’ve also been making the pilgrimage to the desert with the rest of the AWS community for AWS re:Invent!

And every year, the community keeps growing. When I first attended in 2012 as an AWS Solutions Architect, 6,000 developers were there, and the entire conference fit inside the Venetian. This year, attendance reached 43,000, and AWS re:Invent sprawled across five hotels up and down the Strip.

That dramatic growth reflects the equally dramatic expansion of AWS. In 2012, we were still mostly talking about compute and database services. This year, the main topics were machine learning and IoT, and so many new services launched in those areas that I can’t possibly cover them all in one blog entry!

That said, one of the most compelling new services is AWS DeepLens, a hardware and software package designed to help developers get moving quickly with deep learning. As soon as Andy Jassy announced DeepLens in his Day 1 keynote, I immediately checked the official re:Invent app to confirm that DeepLens would have not only a dedicated breakout session but several workshop sessions as well.

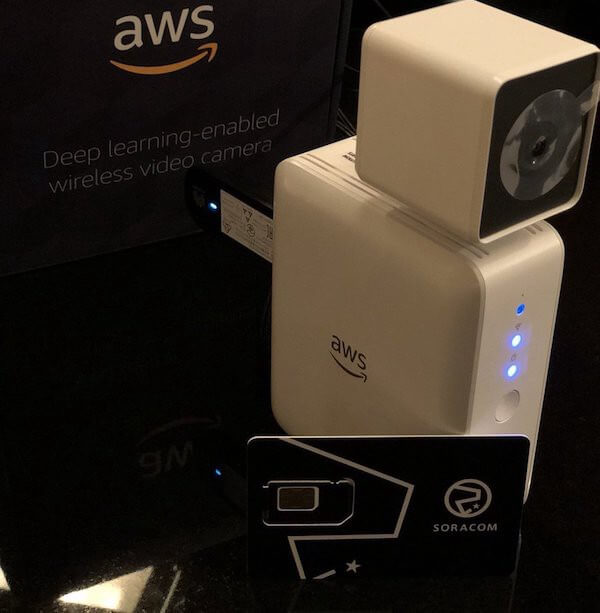

Since this wasn’t my first re:Invent, I had a good idea what that would mean. Using the strong business judgment and good instincts developed during my AWS career, I connected the dots from the Amazon Fire Phone and Amazon Echo Dot handed out at previous shows and, to cut to the chase, I came away with this!

AWS DeepLens

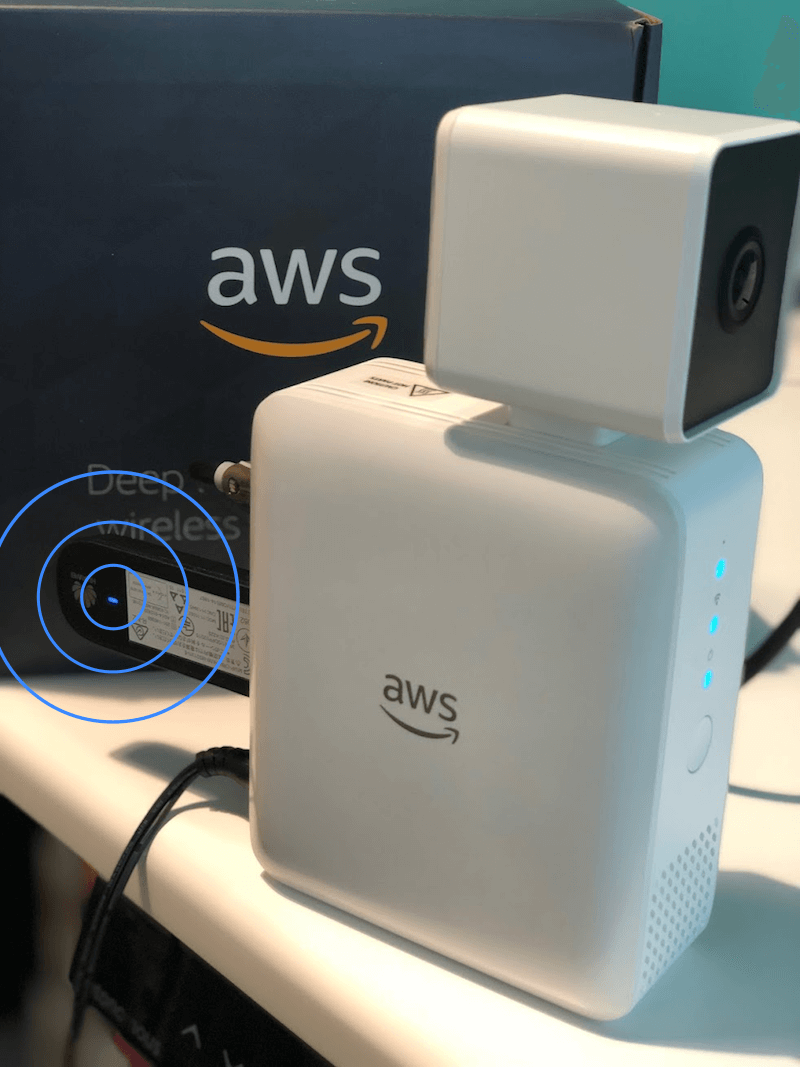

Cute, right? This is the AWS DeepLens device. It has a built-in camera and GPU, WiFi and USB ports, and runs Ubuntu Linux. It ships with AWS Greengrass and a configuration tool that registers the device with the AWS DeepLens service. (You can learn more here: https://aws.amazon.com/deeplens/)

AWS provides a number of pre-trained sample models, along with sample projects that use them. Once you configure the device to connect to WiFi and register it to your AWS account, you can just pick a project, deploy it to the device, and get rolling. So easy.

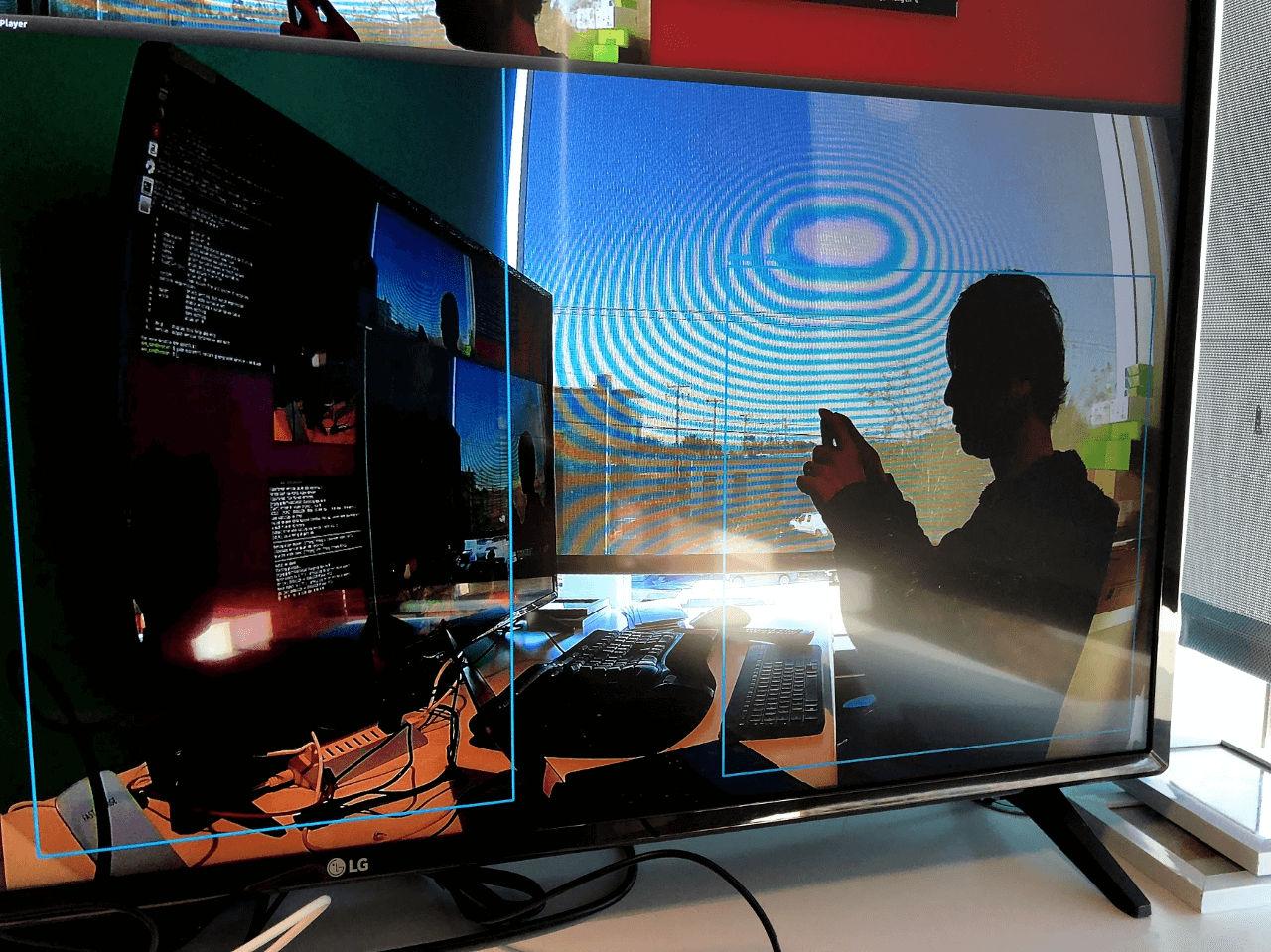

To get started, I put the DeepLens camera just to my left and ran the Object Detection project.

The blue rectangles show that the model, downloaded onto the DeepLens device and executed by AWS Greengrass as a Lambda function, successfully detected me and the TV monitor as objects.

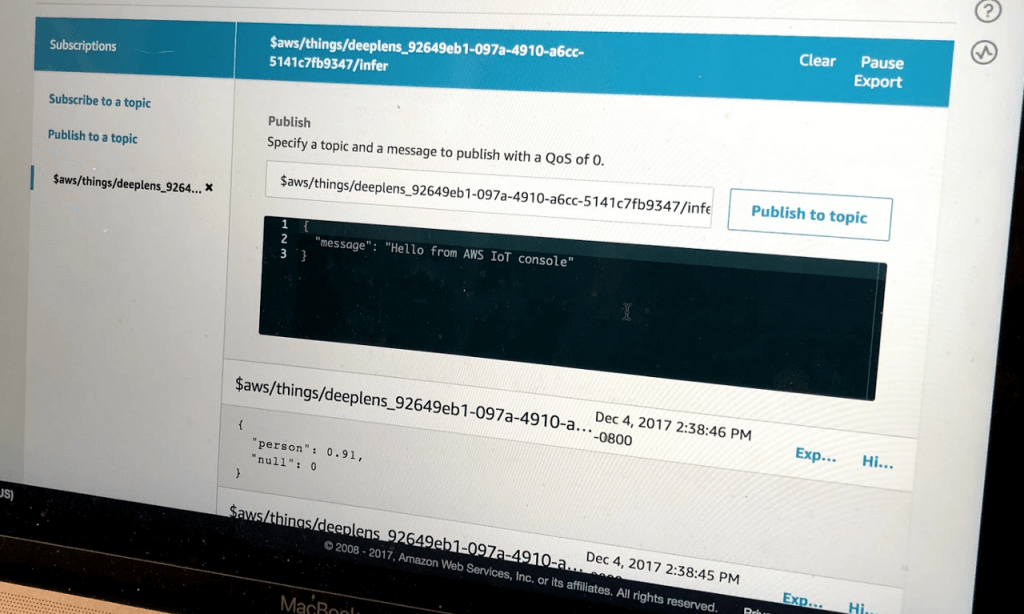

When an object is detected, the code executed on the DeepLens device publishes the information to an AWS IoT topic via MQTT.

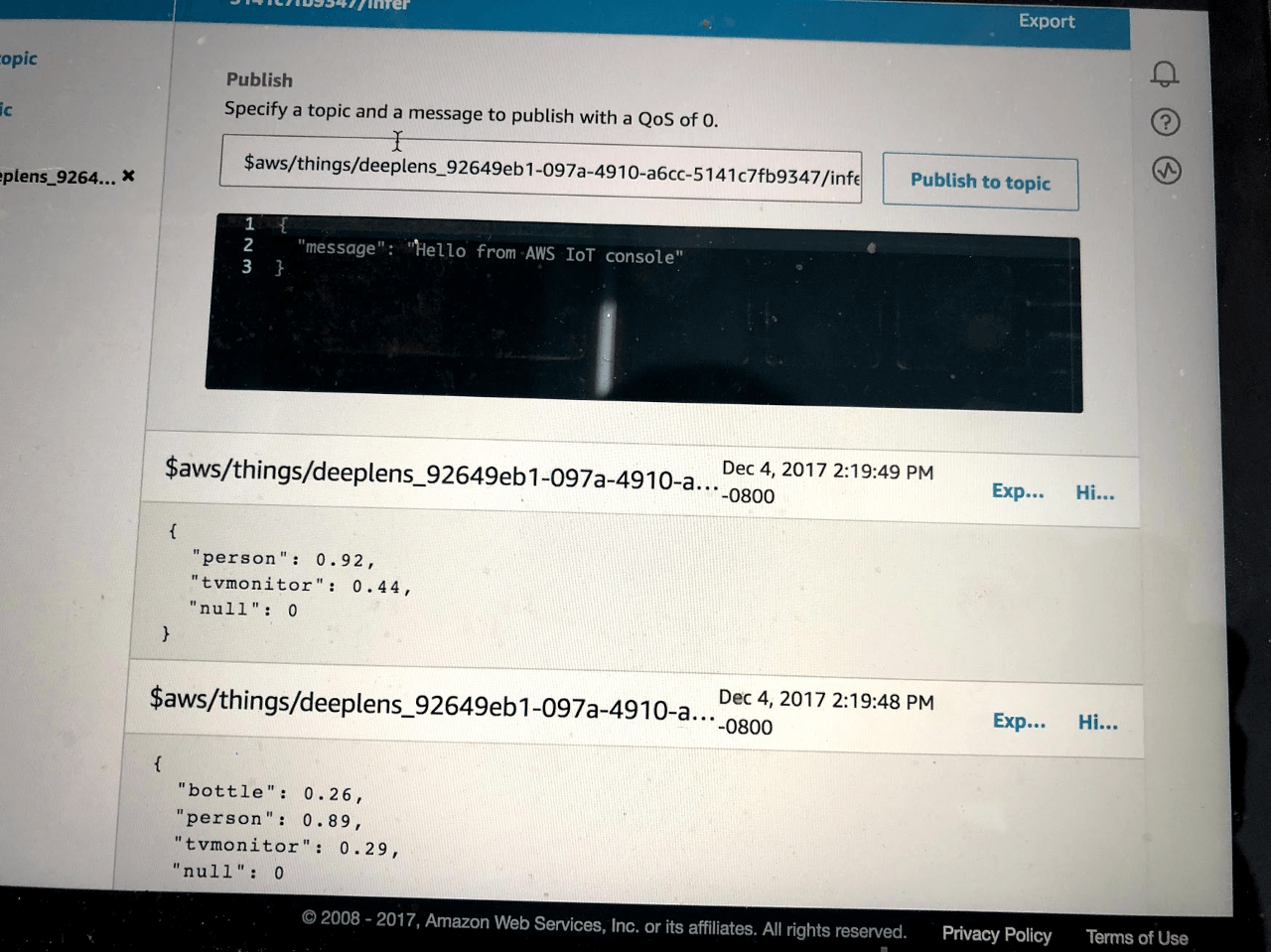

MQTT client subscribed to AWS IoT Topic

In my case, Object Detection reported a person and a tvmonitor with 92% and 44% probabilities, respectively. That means my e-body could fool their model into recognizing me as a human being. Score!
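Because the result is just a small message on an MQTT topic, downstream code can act on it easily. As a minimal sketch, the Bash function below keeps only labels at or above a confidence threshold. It assumes the payload is a flat JSON object mapping each label to its probability, like the person/tvmonitor result above; that payload shape and the name filter_detections are assumptions for illustration, not the documented DeepLens message format.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every label whose probability is >= a threshold.
# ASSUMPTION: the payload is a flat JSON object such as
#   {"person": 0.92, "tvmonitor": 0.44}
# with no nesting and no commas, colons, quotes, or spaces inside labels.
filter_detections() {
  local payload="$1" threshold="$2"
  printf '%s\n' "$payload" \
    | tr -d '{}" ' \
    | tr ',' '\n' \
    | awk -F: -v t="$threshold" '$2 + 0 >= t + 0 { print $1 }'
}

# Example with the values reported above and a 50% cutoff.
filter_detections '{"person": 0.92, "tvmonitor": 0.44}' 0.5   # prints: person
```

For anything beyond a flat object, a real JSON parser is the safer choice; the tr/awk pipeline is only meant to show how little logic the edge result needs.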

Kidding aside, this is pretty great. It lets any developer run machine learning algorithms in their applications while AWS handles the undifferentiated heavy lifting, so they can focus on application and business logic. As Werner Vogels emphasized in his Day 2 keynote, this has always been the core philosophy of AWS. Working with DeepLens, I feel that AWS is opening the door for developers to dive into a new world, just as it did when it made infrastructure something software engineers could control via API.

Since we launched the SORACOM platform, the team here has maintained a similar philosophy: we solve the issues common to IoT so our users can focus on their applications and business logic instead of spending cycles on the undifferentiated heavy lifting of connectivity, security and device management.

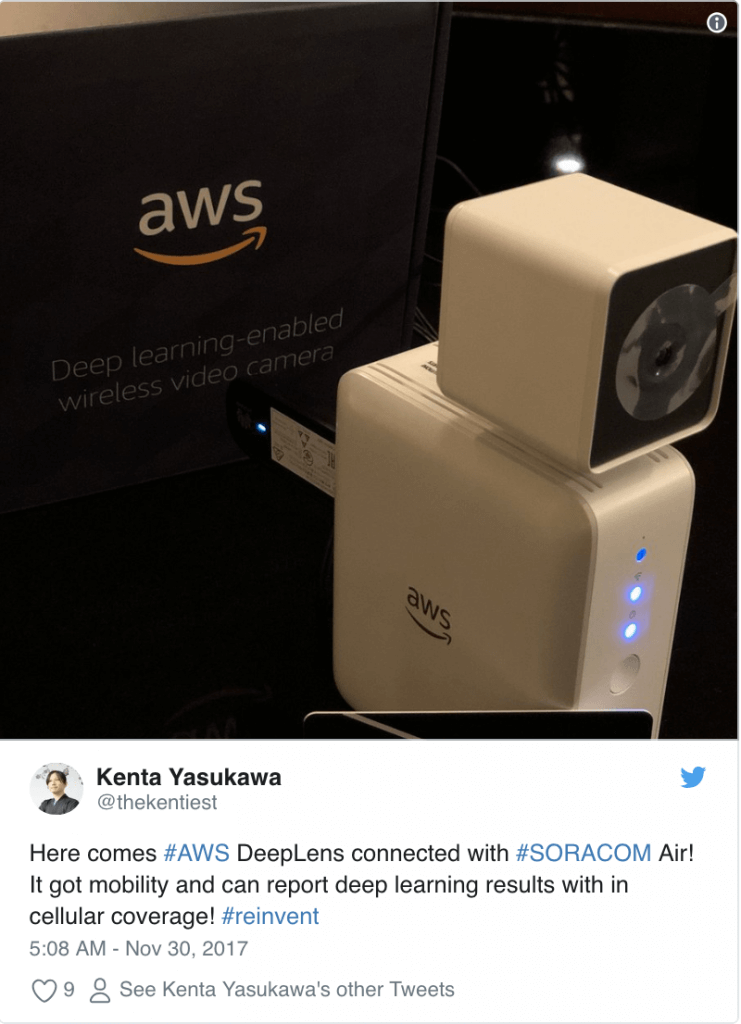

So what can we add in this particular case? One obvious addition is SORACOM Air, which gives AWS DeepLens a new cellular connectivity option. That’s exactly what I did right after I got the device and went back to my hotel room:

…

Taking DeepLens mobile with SORACOM Air

Back home in California, I did it again at my office. This time, I can walk you through it.

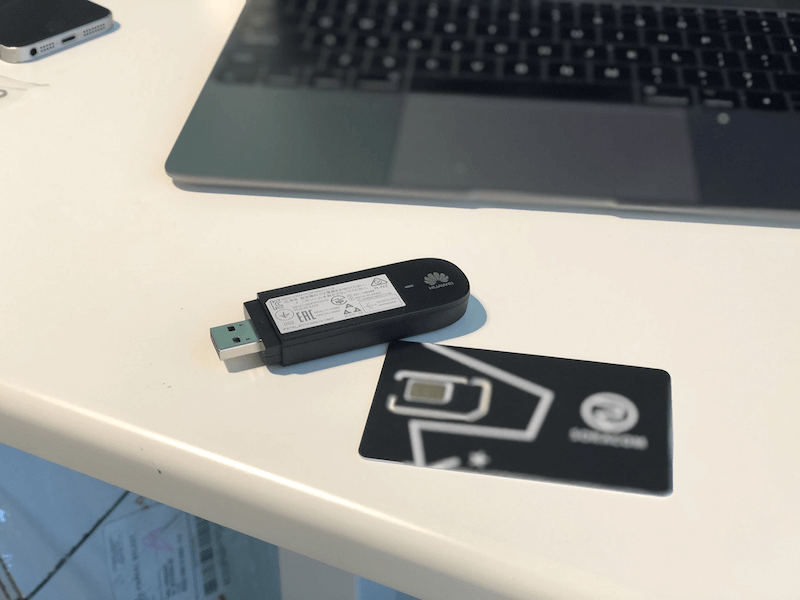

First, I grabbed a USB cellular modem and a SORACOM SIM card (available on Amazon.com)

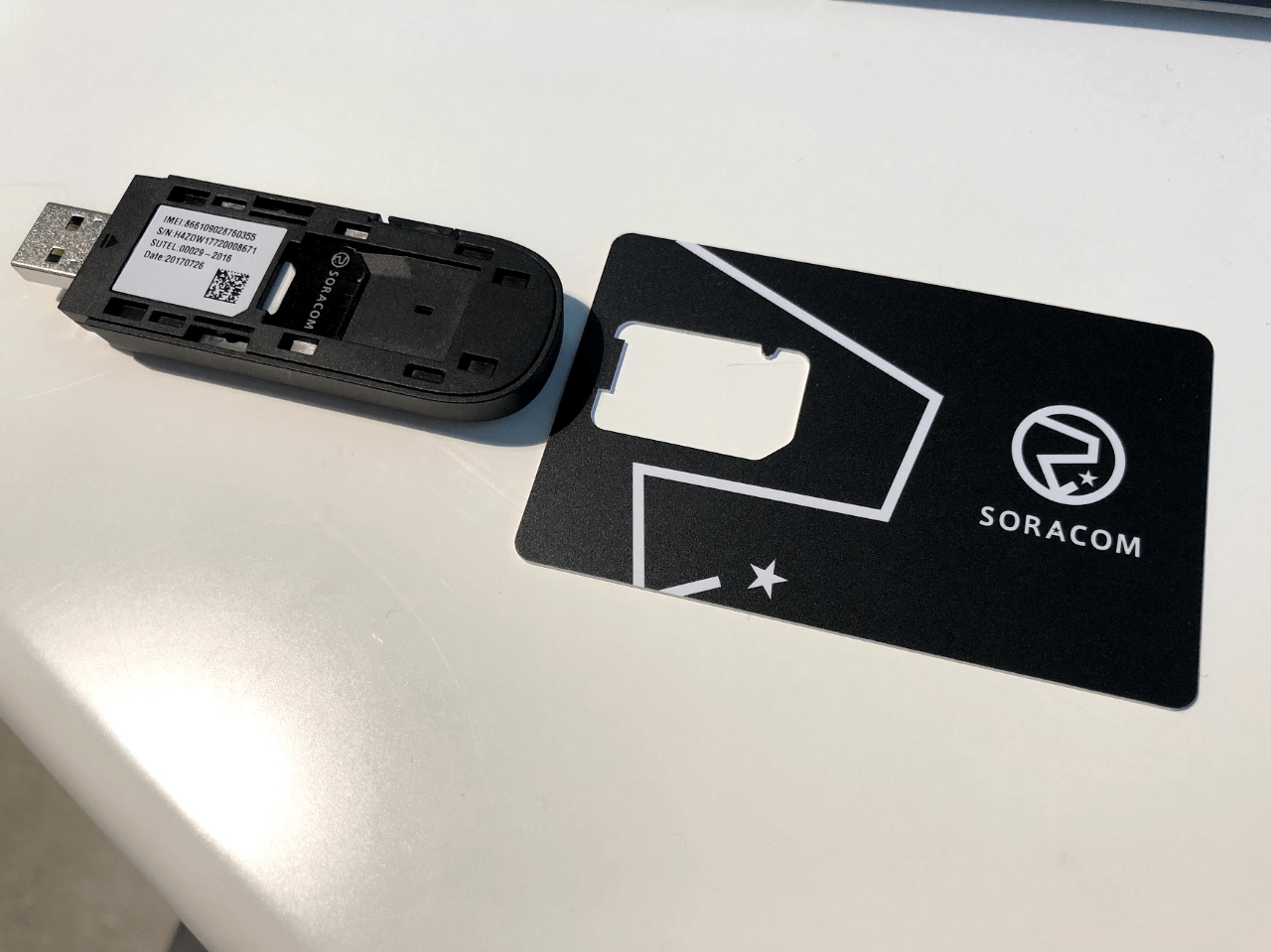

2. I popped out the SIM card and inserted it into the USB modem,

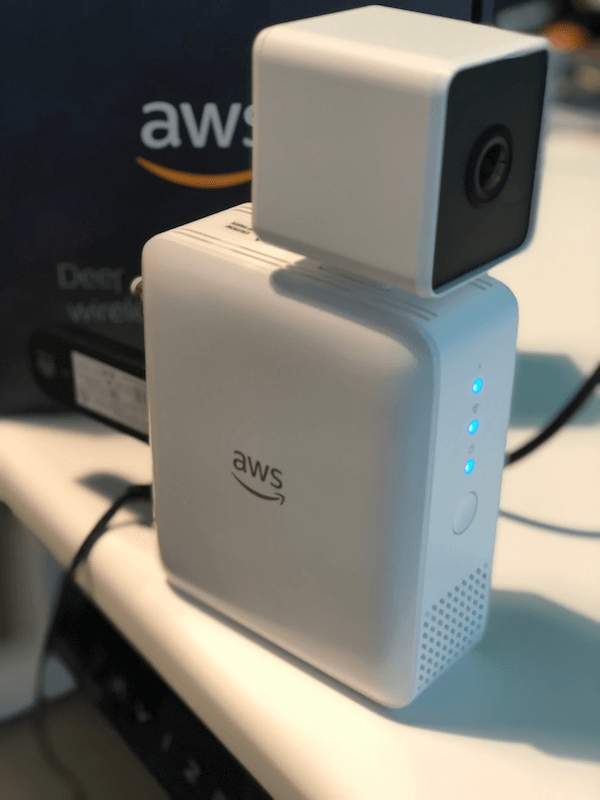

3. I connected the USB modem to AWS DeepLens

AWS DeepLens with USB 3G modem

At this point, the modem was not yet connected. But no worries: only a few steps remain to get the device connected.

1. First, we ask the OS to detect the USB device as a modem. For the Huawei MS2131 that I used here, an appropriate driver is already included in the Ubuntu Linux running on the DeepLens device. We just need to run the following command so that the OS recognizes the dongle as a modem rather than a mass storage device.

$ sudo usb_modeswitch -v 12d1 -p 14fe -J

(12d1 is the vendor ID for Huawei, 14fe is the product code for the MS2131, and -J runs the Huawei-specific mode-switch procedure. You may need to find the right values for your own modem.)

2. Next, we configure NetworkManager to dial up with the right APN whenever a modem is detected.

$ sudo nmcli con add type gsm ifname "*" con-name soracom apn soracom.io user sora password sora
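If you use a different dongle, the vendor and product IDs for usb_modeswitch in step 1 can be read from lsusb, which prints them as vendor:product right after the word ID. Here is a minimal Bash sketch that turns one lsusb line into the -v/-p arguments; the helper name modeswitch_args and the sample line are hypothetical, and the -J flag still applies only to Huawei modems.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn one line of `lsusb` output into the -v/-p
# arguments that usb_modeswitch expects. lsusb lines look like:
#   Bus 001 Device 004: ID 12d1:14fe Huawei Technologies Co., Ltd. ...
modeswitch_args() {
  local id
  # Find the field that follows the literal word "ID" (vendor:product).
  id=$(printf '%s\n' "$1" | awk '{ for (i = 1; i < NF; i++) if ($i == "ID") { print $(i + 1); exit } }')
  [ -n "$id" ] || return 1            # no ID field found
  printf -- '-v %s -p %s\n' "${id%%:*}" "${id##*:}"
}

# Illustrative lsusb line (not captured from my device).
line='Bus 001 Device 004: ID 12d1:14fe Huawei Technologies Co., Ltd. Modem'
modeswitch_args "$line"   # prints: -v 12d1 -p 14fe

# On the device itself, something like (-J is Huawei-specific):
#   sudo usb_modeswitch $(modeswitch_args "$(lsusb | grep -i huawei)") -J
```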

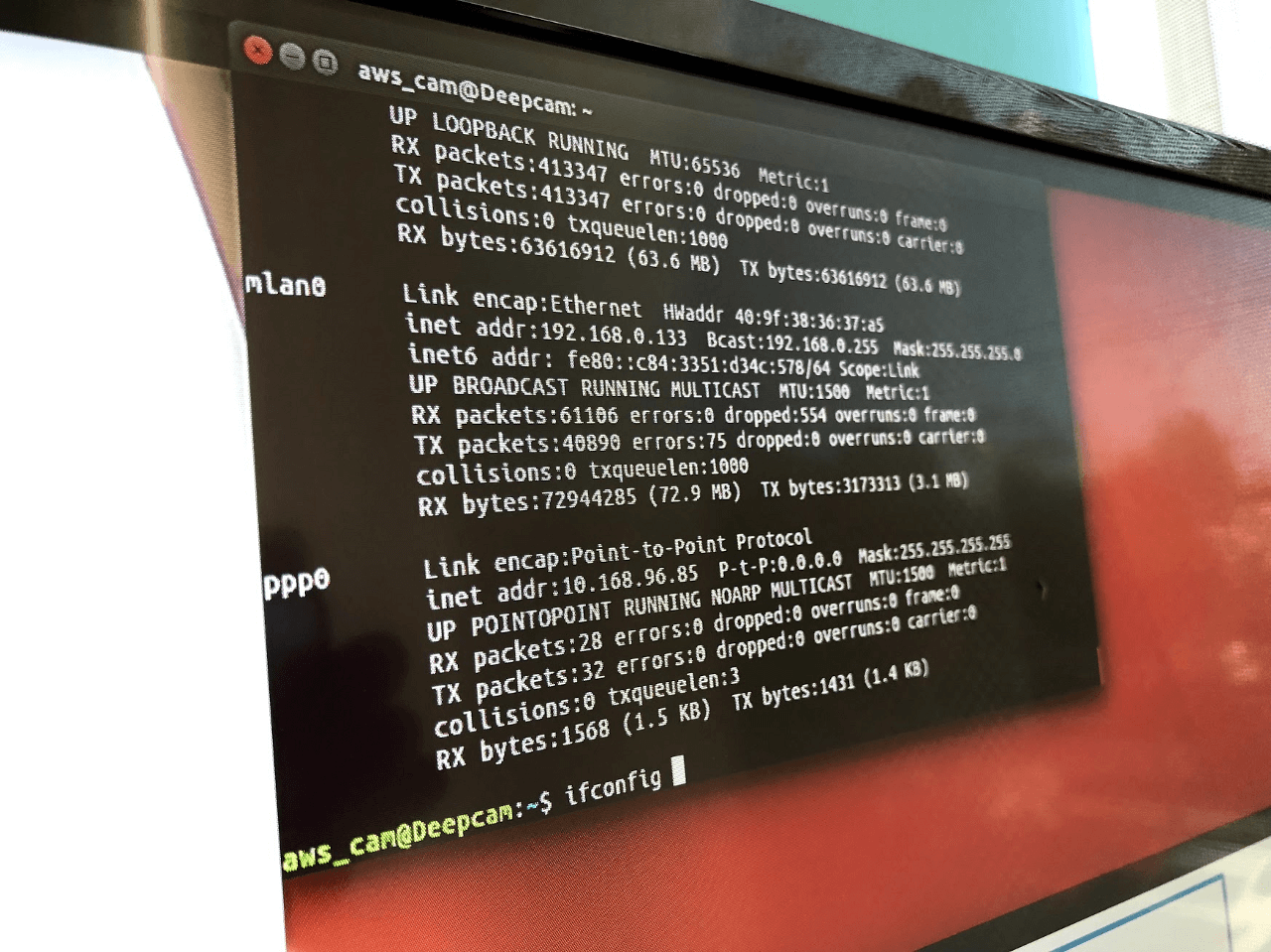

Is that it? Yes, that is it. Here is the proof:

AWS DeepLens with active 3G connection!

See the difference? The steady blue LED on the USB dongle indicates that the modem has established an over-the-air IP connection.
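Besides the LED, you can ask NetworkManager directly. nmcli's terse mode (-t) prints colon-separated fields, so a small filter can report which cellular devices are connected. This is a sketch: the helper name connected_gsm_devices and the device name ttyUSB0 in the sample are hypothetical, and your modem may show up under a different name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read terse nmcli output on stdin and print the names
# of GSM (cellular) devices whose state is "connected".
# Expected input, from `nmcli -t -f DEVICE,TYPE,STATE device`:
#   mlan0:wifi:connected
#   ttyUSB0:gsm:connected
connected_gsm_devices() {
  awk -F: '$2 == "gsm" && $3 == "connected" { print $1 }'
}

# Illustrative input (device names are examples only).
printf '%s\n' 'mlan0:wifi:connected' 'ttyUSB0:gsm:connected' | connected_gsm_devices
# prints: ttyUSB0

# On the device:
#   nmcli con up soracom    # bring the connection up by hand if needed
#   nmcli -t -f DEVICE,TYPE,STATE device | connected_gsm_devices
```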

Here is more obvious proof:

Yes, ppp0! The device has a new interface that corresponds to the cellular link. It now has two links to reach the AWS IoT endpoint: WiFi (mlan0) and the SORACOM Air cellular link (ppp0).
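To list the interfaces and their IPv4 addresses from the command line, ip -o -4 addr show prints one line per address. The sketch below reduces that to interface/address pairs; the helper name ipv4_interfaces is hypothetical, and the sample addresses are made up for illustration rather than captured from my device.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarize `ip -o -4 addr show` output (one line per
# address) as "interface address" pairs, skipping the loopback interface.
ipv4_interfaces() {
  awk '$3 == "inet" && $2 != "lo" { print $2, $4 }'
}

# Illustrative input with made-up addresses.
sample='1: lo    inet 127.0.0.1/8 scope host lo
3: mlan0    inet 192.168.1.23/24 brd 192.168.1.255 scope global mlan0
5: ppp0    inet 10.0.0.5 peer 10.64.64.64/32 scope global ppp0'
printf '%s\n' "$sample" | ipv4_interfaces
# prints:
#   mlan0 192.168.1.23/24
#   ppp0 10.0.0.5

# On the device:
#   ip -o -4 addr show | ipv4_interfaces
```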

This means that my AWS DeepLens device is now mobile. I can deploy it anywhere I have power and cellular coverage. WiFi is great as long as devices are static and inside a hotspot, but as most IoT developers know, that is not something we can always count on.

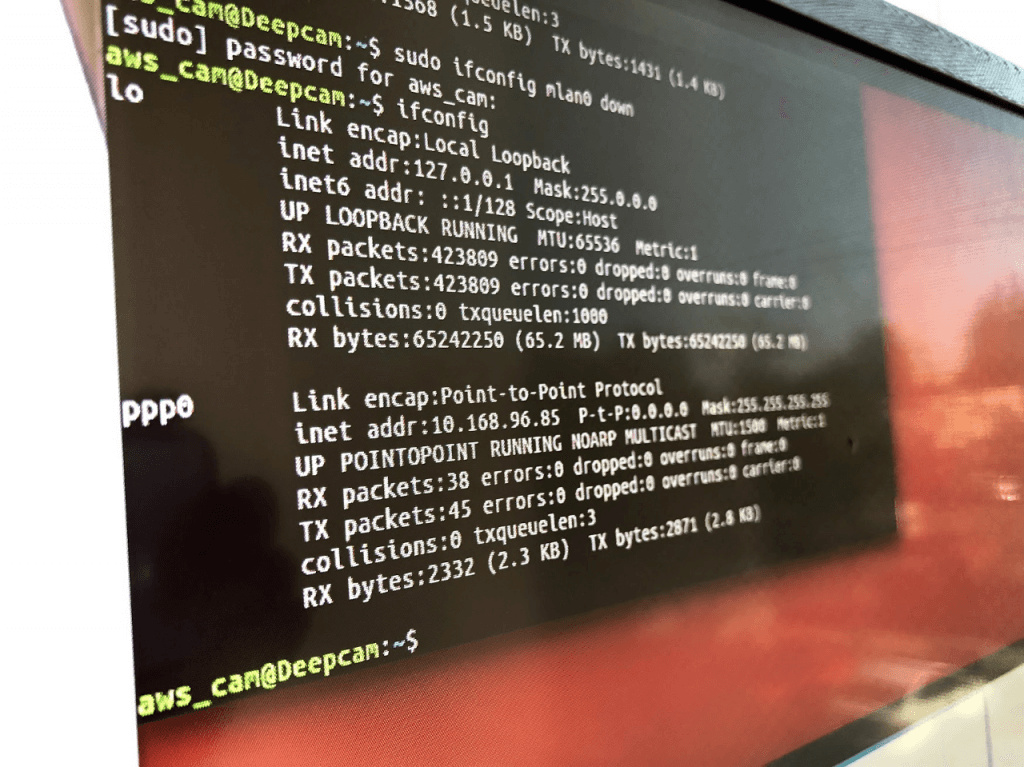

To emulate a situation where WiFi coverage is not available, I brutally typed sudo ifconfig mlan0 down on my console.

Poof. The WiFi was gone, and the device could reach the network only over cellular.

That was not a problem: the AWS DeepLens device still reported its inference results!
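To double-check which link the traffic actually takes, you can ask the kernel with ip route get, which names the outgoing interface after the word dev. This is a minimal sketch assuming iproute2, which ships with stock Ubuntu; the helper name route_dev and the target address 1.1.1.1 are arbitrary choices for illustration. Note that ip link set mlan0 down is the iproute2 counterpart of the ifconfig command above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the outgoing interface named in
# `ip route get` output, i.e. the word that follows "dev".
route_dev() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Illustrative output line (addresses are made up).
echo '1.1.1.1 dev ppp0 src 10.0.0.5 uid 1000' | route_dev   # prints: ppp0

# On the device (1.1.1.1 is just an arbitrary public address):
#   sudo ip link set mlan0 down        # same effect as ifconfig mlan0 down
#   ip route get 1.1.1.1 | route_dev   # expect ppp0 while WiFi is down
#   sudo ip link set mlan0 up          # restore WiFi afterwards
```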

Because DeepLens is an edge computing device that runs inference locally and talks to the cloud only to report the results, the bandwidth of a cellular modem is good enough. The combination of AWS DeepLens and SORACOM Air gives developers a practical way to build intelligent, cutting-edge IoT applications.
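To put "good enough" into rough numbers, here is back-of-the-envelope arithmetic in Bash. The 100-byte payload and once-per-second publish rate are assumptions, not measurements from DeepLens, and protocol overhead (MQTT, TLS, TCP/IP, PPP) is deliberately left out, so real usage will be higher.

```bash
#!/usr/bin/env bash
# Hypothetical estimate: MQTT payload volume per 30-day month, in megabytes
# (10^6 bytes, integer division). Protocol overhead is NOT included.
monthly_payload_mb() {
  local payload_bytes="$1" messages_per_minute="$2"
  local minutes_per_month=$((60 * 24 * 30))   # 43,200 minutes
  echo $((payload_bytes * messages_per_minute * minutes_per_month / 1000000))
}

# ASSUMED inputs: a 100-byte JSON result published once per second.
monthly_payload_mb 100 60   # prints: 259
```

Even several times that figure is far less than continuously streaming camera video to the cloud would need, which is the point of running inference at the edge.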

How else can SORACOM help IoT developers?

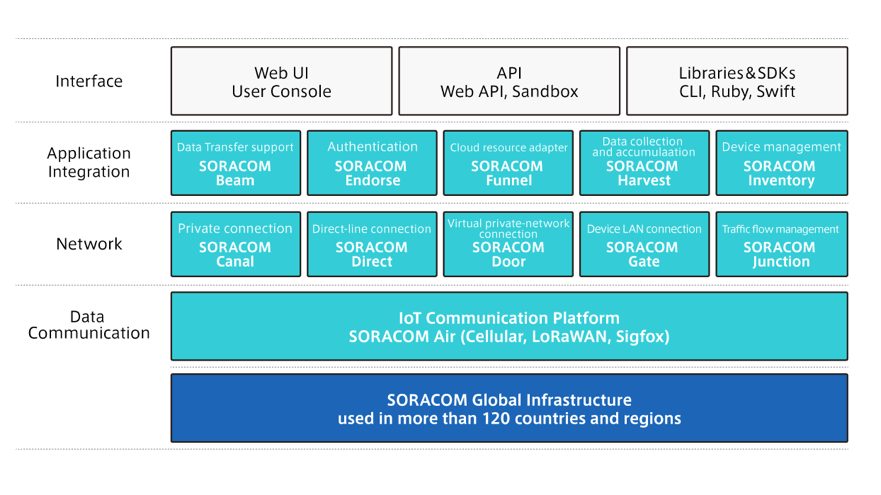

What I showed here is just one example of the IoT connectivity features available using SORACOM. In addition to the SORACOM Air connectivity service, we also offer network and application layer integration services.

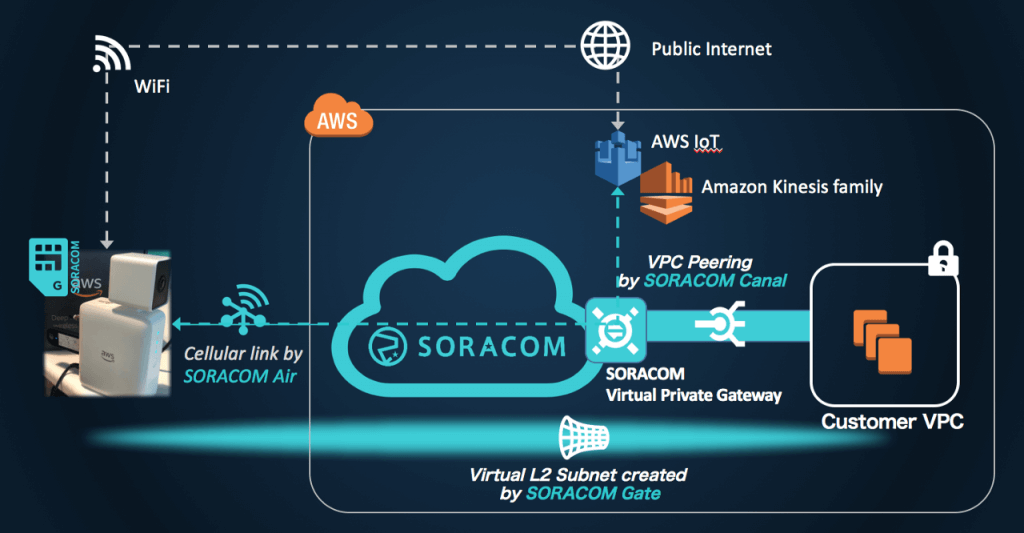

For instance, you can use our network layer services to build a private, dedicated network for your cellular-connected devices and your servers. This lets you isolate your devices from the public internet and still access them (e.g. via SSH) at your convenience.

You can also use SORACOM Canal to establish a private VPC peering link from our infrastructure to your AWS VPC or use SORACOM Gate to create a virtual L2 subnet for your devices and servers and let them communicate with each other as if they were on the same LAN.

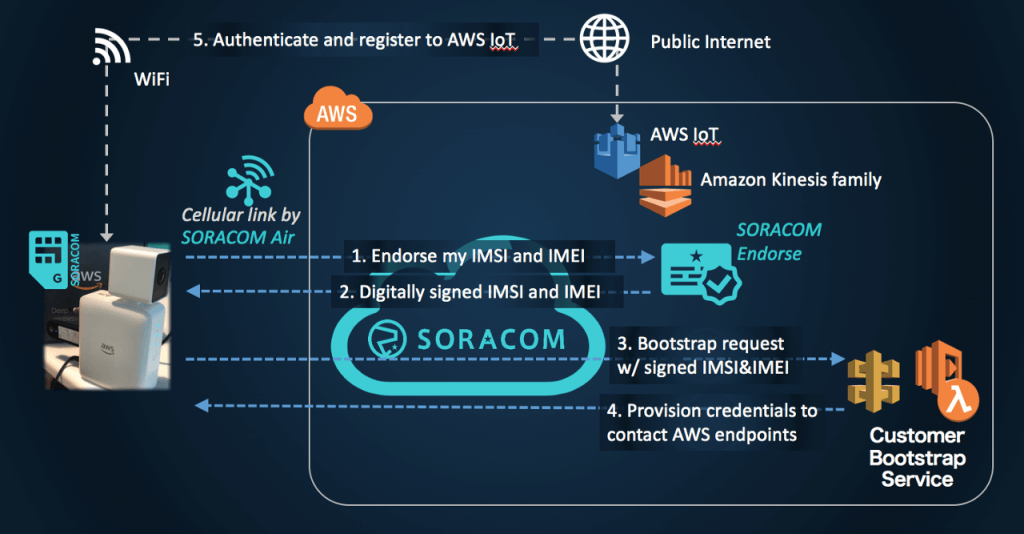

You might also leverage our application integration services to simplify device management. In practice, you need to provision at least one set of credentials for each IoT device so it can bootstrap and/or authenticate with a backend service such as AWS IoT or AWS DeepLens.

For example, SORACOM Endorse issues a digital certificate that assures a client has a particular combination of IMSI (the subscriber identity stored on the SIM) and IMEI (the identity unique to each modem), so you can authenticate a device. SORACOM Beam proxies requests from a device to your server, adding the device’s IMSI and IMEI to the request headers.
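On the server side, reading the identity that Beam adds is just a header lookup. The Bash sketch below extracts one header from a raw HTTP header block, matching names case-insensitively as HTTP requires. The helper name header_value, the header names X-SORACOM-IMSI and X-SORACOM-IMEI, and the sample values are assumptions for illustration; check the SORACOM Beam documentation for the exact header names your configuration adds.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read raw HTTP request headers on stdin and print the
# value of one header, matching the name case-insensitively.
header_value() {
  local name="$1"
  awk -v want="$name" '
    BEGIN { want = tolower(want) }
    {
      sub(/\r$/, "")                    # tolerate CRLF line endings
      colon = index($0, ":")
      if (colon == 0) next              # request line or blank line
      key = tolower(substr($0, 1, colon - 1))
      if (key == want) {
        value = substr($0, colon + 1)
        sub(/^[ \t]+/, "", value)       # trim leading whitespace
        print value
        exit
      }
    }'
}

# ASSUMED header names and placeholder values, for illustration only.
headers=$'Host: example.com\r\nX-SORACOM-IMSI: 001010000000001\r\nX-SORACOM-IMEI: 350000000000001\r\n'
printf '%s' "$headers" | header_value x-soracom-imsi   # prints: 001010000000001
```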

Last but definitely not least

We created SORACOM to offer cloud-native IoT connectivity as a service so our customers can focus on the fun part of IoT: building cool applications. We learn about challenges in IoT as our customers build applications on top of our platform, and we fuel our own innovation by listening actively to customer feedback.

If you want to build something that connects real-world objects or things, sign up! You create, we connect.